Emily Paterson

Learning Experience Designer

Knowing Nature: Evaluating Visitor Learning Outcomes

Duration: June-August 2023

Overview: An evaluation report that aimed to uncover the visitor learning outcomes of a traveling Smithsonian exhibition on display at the MSU Museum.

Background:

I was selected as one of two Smithsonian Leadership for Change interns at the MSU Museum, a Smithsonian Affiliate. As a part of our work, we aimed to develop an evaluation plan to uncover the impact of the traveling Smithsonian exhibition “Knowing Nature: Stories of the Boreal Forest” on visitors and their feelings, actions, and takeaways.

With its first appearance at the MSU Museum in April 2023, “Knowing Nature” is an exhibition centered on communicating the rich biodiversity and global importance of the North American Boreal through commissioned objects and the first-person perspectives of its Indigenous peoples.

Audience:

“Knowing Nature” was created by SITES (Smithsonian Institution Traveling Exhibition Service) with a target audience of middle school students (grades 6-8) and above. Over the summer, when MSU students are not on campus, the MSU Museum experiences a larger influx of younger visitors and their parents. This made it an ideal time to explore the exhibition’s impact on them and their learning.

Goal:

When we first embarked on this project, it became clear that we wanted to draw attention to how the exhibition shifted visitors’ attitudes, which we could measure immediately after a visitor’s experience. With that scope in mind, we decided to focus on the following success metrics:

- Do visitors feel a sense of hope that together we can address climate change?

- Do visitors feel a sense that there are things they can do in their everyday lives that can affect the health of the boreal forest?

- Do visitors feel a sense that there are things they can do to improve the health of their local environment?

With the objective of answering these three questions, our project aimed to provide SITES with a snapshot of the current state of visitor experience, highlighting current behaviors and ultimate takeaways. Through our research findings, we aimed to provide recommendations to inform the exhibition’s future travels and the construction of other SITES exhibitions on similar topics.

Ideation:

Early on in the process, we knew we had to work within the time constraints of our internship (an eight-week period with three weeks on-site at the MSU Museum). With this in mind, we wanted to quickly devise a plan for in-person research methods—something we couldn’t do while working remotely.

After evaluating various data collection options, we landed upon a three-step plan:

- Intercept interviews

- Visitor observations

- Post-visit survey

Intercept Interviews:

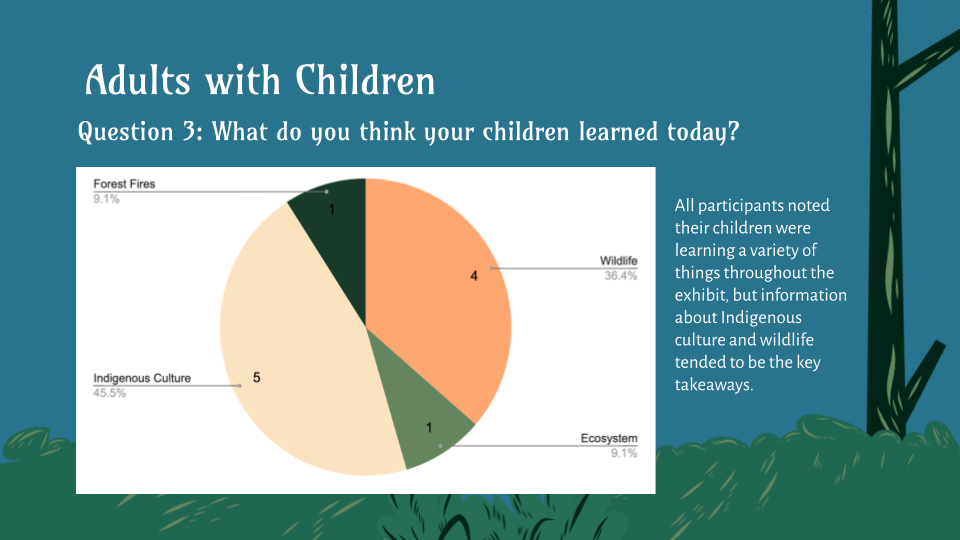

To capture immediate visitor takeaways, we would ask to interview visitors directly after they left the gallery. We developed two short sets of questions: one for adults with children (under 18) and one for adults without children. This decision was inspired by our goal to understand the takeaways of our target audience (younger visitors). To avoid any ethical concerns, we would specifically interview the parents/guardians about their perceived understanding of what their child(ren) took away.

I interviewed 13 of our 16 participants, asking them a set of 4-8 questions about their experience. After recording and transcribing their answers, I developed two coding keys to synthesize and analyze their responses to different prompts.
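
To illustrate what applying a coding key involves, here is a minimal Python sketch. It is not the key or process used in this project: the themes and keywords are hypothetical placeholders, and simple keyword matching is only a rough stand-in for the judgment a human coder brings to each transcript.

```python
from collections import Counter

# Hypothetical coding key: theme -> keywords that signal it.
# These themes and keywords are illustrative, not the keys used in the study.
CODING_KEY = {
    "hope": ["hope", "hopeful", "optimistic"],
    "personal_action": ["recycle", "bike", "carpool"],
    "local_environment": ["local", "backyard", "neighborhood"],
}


def code_response(text, key=CODING_KEY):
    """Return the sorted list of themes whose keywords appear in the response."""
    lowered = text.lower()
    return sorted(
        theme
        for theme, words in key.items()
        if any(word in lowered for word in words)
    )


def tally_themes(responses, key=CODING_KEY):
    """Count how many responses were tagged with each theme."""
    counts = Counter()
    for text in responses:
        counts.update(code_response(text, key))
    return counts
```

Calling `tally_themes` on a list of transcribed answers gives a per-theme count, which is one way to turn open-ended responses into something that can be compared across prompts.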

Visitor Observations:

While waiting in the gallery to interview visitors, we also spent time observing their behaviors. We knew that we were going to be gathering a lot of qualitative data from our interviews, so we wanted to supplement that with some other forms of data as well.

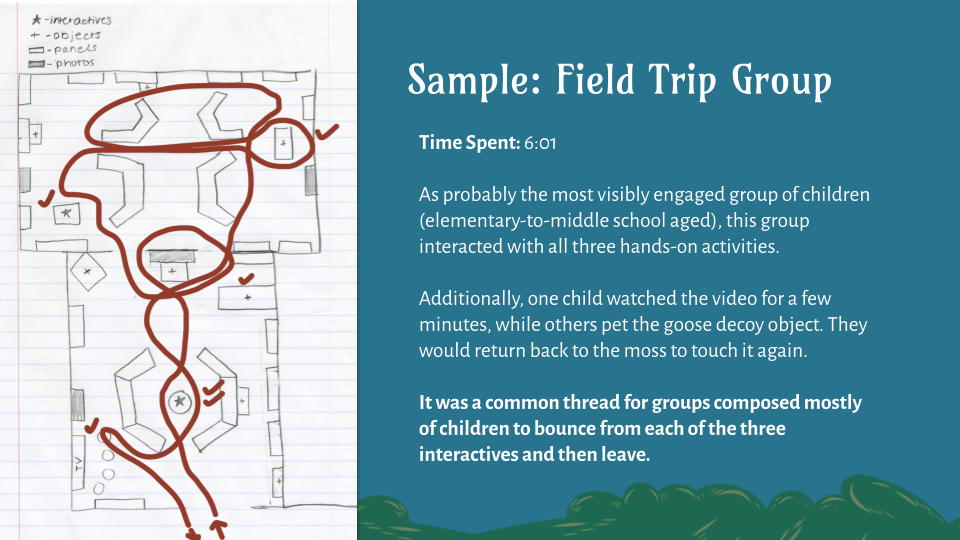

I created a diagram of the entire exhibition layout and then mapped out the paths of visitor groups as they walked through the space. We also timed them (from entrance to exit), made note of interactions with hands-on activities, and wrote down any other unique engagement patterns with the exhibition.

After gathering this data, we overlaid all of the visitor paths into a heatmap to get a sense of how visitors were traveling through the exhibition and what they were interacting with the most. We also highlighted individual groups of visitors to get a sense of general behavior patterns among certain types of groups.
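
The overlay idea can also be expressed computationally. The following is a minimal Python sketch, assuming the gallery floor plan is divided into a grid and each group's path is recorded as a list of `(x, y)` cells. The grid representation, the function name, and the example paths are all hypothetical and do not describe how the heatmap in this project was actually produced.

```python
def build_heatmap(paths, width, height):
    """Count, for each grid cell, how many visitor groups passed through it."""
    grid = [[0] * width for _ in range(height)]
    for path in paths:
        # Use a set so a group that lingers or doubles back counts once per cell.
        for x, y in set(path):
            grid[y][x] += 1
    return grid


# Made-up example paths on a 2x2 grid (placeholder data, not study results).
example_paths = [
    [(0, 0), (1, 0), (1, 1)],
    [(0, 0), (0, 1), (1, 1), (1, 1)],
]
example_heatmap = build_heatmap(example_paths, width=2, height=2)
```

Cells with higher counts mark the areas most groups walked through, while cells with low counts point to panels or interactives that were often skipped.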

Post-Visit Survey:

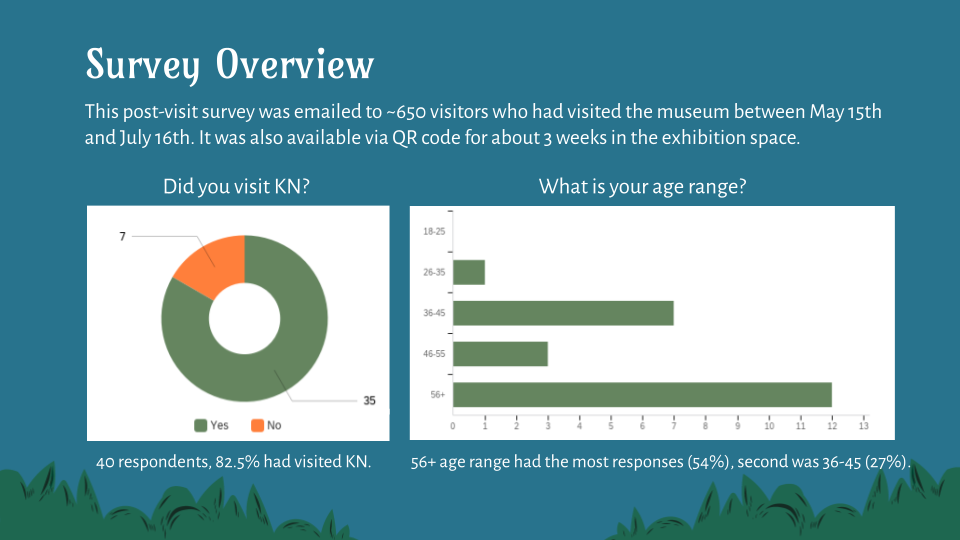

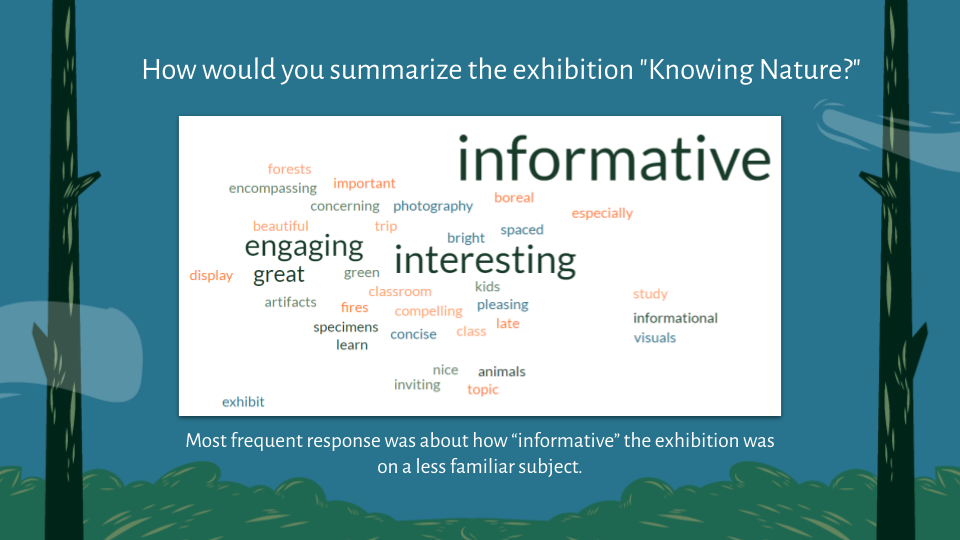

After we had spent our three weeks on site, we wanted to continue measuring visitors’ change in attitude remotely. With this in mind, I constructed an online Qualtrics survey, which was posted in the gallery and emailed by the museum to all visitors for about three weeks.

While the intercept interviews allowed us to speak directly with visitors who may have had a more neutral experience, surveys (in general) tend to get responses from those who had stronger feelings about their experiences. Our survey respondents also ended up generally being on the older side, which is why it was helpful to specifically seek out adults with children during our time on-site.

Additionally, our survey was crafted out of simpler questions than our intercept interviews. This decision was made to maximize the number of respondents by avoiding complicated or strenuous questions that may result in an incomplete survey. A majority of these questions used Likert-scale responses, which allowed us to gather more quantitative data that was easy to visualize on a scale.
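
As a rough illustration of why Likert responses are easy to summarize, here is a minimal Python sketch. It assumes a five-point agreement scale coded 1 to 5, which is an assumption rather than a detail of the actual survey, and the labels and function name are hypothetical.

```python
from collections import Counter
from statistics import mean

# Assumed five-point agreement scale; the real survey's scale may differ.
LIKERT_LABELS = {
    1: "Strongly disagree",
    2: "Disagree",
    3: "Neutral",
    4: "Agree",
    5: "Strongly agree",
}


def summarize_likert(scores):
    """Summarize one Likert question: counts per label, mean, and share agreeing."""
    if not scores:
        raise ValueError("scores must contain at least one response")
    counts = Counter(scores)
    distribution = {label: counts.get(value, 0) for value, label in LIKERT_LABELS.items()}
    agree_share = (counts[4] + counts[5]) / len(scores)
    return {
        "distribution": distribution,
        "mean": mean(scores),
        "agree_share": agree_share,
    }
```

The `distribution` entry maps directly onto a bar chart for each question, and `agree_share` gives a single number for statements such as the three success metrics.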

Our Recommendations:

Short-Term: MSU Museum

- Introduce new interactive: A Boreal Balance (student-created game) is designed to capture a younger audience, encourage closer looking at panels, and foster reflection on behaviors

- Add captions to the video showcasing Indigenous perspectives

- Reset seasonal puzzle interactive more frequently

Medium-Term: Next Venues

- Younger visitors need additional interpretation: A parent guide or audio tour (for early readers) would help with engagement

- Future layout needs more spacing in panel-dense areas: Visitors would often skip the inner panels while reading the outer ones

- Video should be harder to skip past: Needs a wider screen or placement closer to the entrance

Long-Term: Future Exhibitions

- Fewer words help all audiences: Younger and older visitors alike would benefit from shorter panel descriptions

- More concrete actions for visitors to take: The scale of environmental destruction is difficult for visitors to comprehend, especially with regard to how they can play a role. Consider more explicit suggestions of actions visitors can take to make a difference.

Impact:

Ultimately, this evaluation project (view the full Google Slides report) played a crucial role in shaping the design of my other project, A Boreal Balance. I worked on the two simultaneously and used the insights from my research to inform my design solution. As a main takeaway, I aimed to address the lack of hope that visitors were feeling about climate change, which was the opposite of the exhibition’s goal based on its ideal success metrics.

In the game, I specifically built in a moment for reflection to encourage visitors to think critically about their behaviors and engage with potential solutions (e.g., riding a bike or carpooling). In contrast to the exhibition’s more subtle and less concrete approach, my path of explicit takeaways aimed to pick up where the exhibition left off and foster a greater sense of action among visitors.